I bought Nvidia shares in 2017 because it had clearly established itself as the leader in high-performance graphics chips for gaming PCs and consoles like the Nintendo Switch.

It still makes graphics processors for gaming, but a lot has happened since then. Their speed at parallel processing made its chips the go-to choice for cryptocurrency mining, and that same strength positioned Nvidia to capture nearly 90% of the market for chips used in artificial intelligence training and inference.


It’s been less of a transformation and more of an evolution.

Years ago, the company decided to target the whole stack, from chips to software and networking. That decision cleared the way for its AI foothold and has made it harder for rivals like AMD to carve away market share, even as they roll out their own chips optimized for AI.

As a result, Nvidia’s annual revenue surged from below $11 billion in 2020 to over $130 billion last year, while AMD’s revenue grew more slowly, from $9.8 billion to $26 billion.

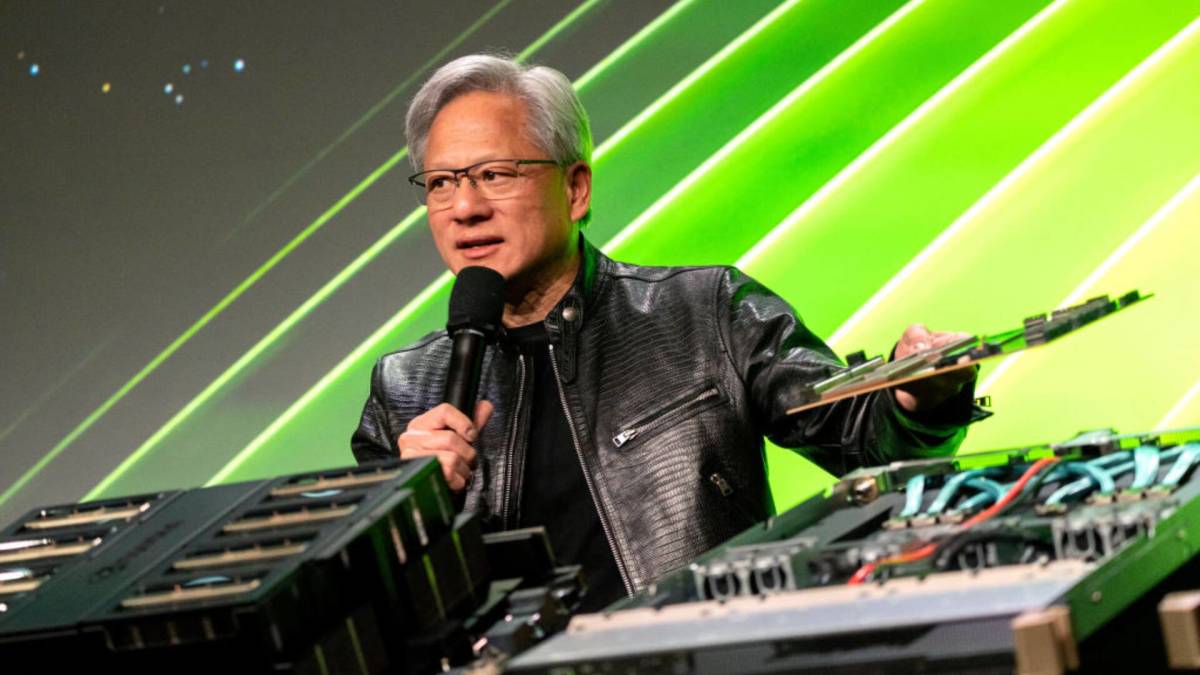

Jensen Huang, co-founder and chief executive officer of Nvidia Corp., has built an AI infrastructure company.


Image source: Morris/Bloomberg via Getty Images

Nvidia delivers a steady cadence of faster AI chips

Under CEO Jensen Huang, Nvidia now releases AI chip upgrades annually:

- 2022: H100. Built on the Hopper architecture, this chip was the first to be widely embraced for accelerating large language models, the technology behind AI chatbots like OpenAI’s ChatGPT.

- 2023: H200. Also built on the Hopper architecture, the H200 was 20% faster than the H100 in AI training and twice as fast in AI inference, thanks partly to more memory.

- 2024: GB200. The first AI superchip created on Nvidia’s Blackwell architecture, the GB200 combines two B200 Blackwell GPUs with a Grace CPU to deliver faster speeds and greater energy efficiency per query.

- 2025: GB300. Built on Blackwell Ultra, the GB300 packs more cores and memory and delivers 1.5 times the performance of the GB200.

- 2026: R100. Based on Nvidia’s entirely new Rubin architecture, this chip will succeed Blackwell and be paired with Nvidia’s Arm-based Vera CPU. Testing is under way this year ahead of the 2026 launch. Nvidia says it will offer up to 50 petaflops of FP4 (4-bit floating point) inference performance, more than double the 20 petaflops delivered by Blackwell.

AI infrastructure market could be worth ‘trillions’

Nvidia’s chips are essential to its dominance in AI infrastructure, but it’s not just these faster and more energy-efficient chips that are making it tough for rivals like AMD to dethrone it.


In 2006, Nvidia launched CUDA (Compute Unified Device Architecture), a software platform that lets developers use its GPUs for general-purpose computing. That forward-thinking move is paying big dividends today: many of Nvidia’s biggest customers, including hyperscalers like Amazon’s AWS and Alphabet’s Google Cloud, have written software on CUDA to get the most out of the Nvidia GPUs in their server racks, and moving that code to a rival’s chips takes time and money.

Nvidia has also built a deep moat in other ways. It has developed switches and interconnects that allow Nvidia-powered servers to work more efficiently.

For instance, in August, Nvidia launched Spectrum-XGS Ethernet, which allows data centers in different places to connect faster and with more stability than existing technology, creating what Nvidia CEO Jensen Huang calls “AI super-factories.”

“We have Spectrum-XGS, a giga scale for connecting multiple data centers, multiple AI factories into a super factory, a gigantic system,” said Huang on Nvidia’s Q2 conference call. “You’re going to see that networking obviously is very important in AI factories.”

Huang said on the conference call that Nvidia’s networking equipment can lift performance from “65% to 85% or 90%,” a gain large enough that it effectively “makes networking free.”

The combination of chips, servers, networking switches, and interconnects will help Nvidia capture a share of what could become a multitrillion-dollar AI infrastructure market.

“Blackwell and Rubin AI factory platforms will be scaling into the $3 trillion to $4 trillion global AI factory build out through the end of the decade,” said Huang.

As more of Nvidia’s technology becomes embedded in the data centers of customers who are spending big money building Nvidia-centric networks, it becomes harder for rivals like AMD to displace it, despite AMD’s open-source approach to networking.

Nvidia’s networking revenue surges

AI chips, particularly Blackwell, generated the bulk of Nvidia’s $46.7 billion in second-quarter revenue, but the networking business is also growing rapidly.


“Networking revenue was $7.3 billion, up 98% from a year ago and up 46% sequentially, driven by the growth of NVLink compute fabric for GB200 and GB300 systems, the ramp of XDR InfiniBand products, and adoption of Ethernet for AI solutions at cloud service providers and consumer internet companies,” Nvidia wrote in its 10-Q filing with the Securities and Exchange Commission for the second quarter of fiscal 2026, which ended in July 2025.

Last quarter, Nvidia saw strong demand across its Spectrum-X Ethernet, InfiniBand, and NVLink networking products.

Nvidia CFO Colette Kress said, “Spectrum-X Ethernet delivered double-digit sequential and year-over-year growth with annualized revenue exceeding $10 billion…InfiniBand revenue nearly doubled sequentially.”

The adoption of Nvidia’s non-chip solutions means that Nvidia has become more than a chip company; it is now an AI infrastructure powerhouse.

“There’s a lot of reasons why NVIDIA is chosen by every cloud and every start-up and every computer company,” said Huang. “We’re really a holistic full-stack solution for AI factories.”

Todd Campbell owns shares in Nvidia and AMD.
