Artificial intelligence skepticism was growing even before legendary hedge fund manager Michael Burry, a contrarian, decided to kick a hornet’s nest.

Many experts have conceded that AI is a bubble, but they offer a consolation: it is different from the dot-com bubble or, in the view of former Intel CEO Pat Gelsinger, it will take years to burst.

Bank of America analysts usually point out that there will be no overbuilding of AI data centers, due to practical limitations such as access to power and data center space.

The issue of circular financing in the AI space has been raised, but experts believe it is not as significant a problem as in previous cycles.

“In this circular economy, capital, infrastructure, and demand are kept inside the loop,” Sanjit Singh Dang wrote for Forbes. “The structure offers real advantages.”

And at JP Morgan Asset Management, Global Market Strategist Stephanie Aliaga and Investment Specialist Nicholas Cangialosi wrote:

“History reminds us that enthusiasm can run ahead of reality. Yet so far, today’s players are far better capitalized than those of the dot-com era, AI monetization is underway, and the risk of overbuilding seems limited in the near term.”

Burry’s entry into this arena sent a shock wave through the industry, as the SEC 13F filing from his hedge fund Scion Asset Management revealed he is shorting Nvidia (NVDA) and Palantir (PLTR).

However, Burry wasn’t done kicking this hornet’s nest; he was just getting warmed up.

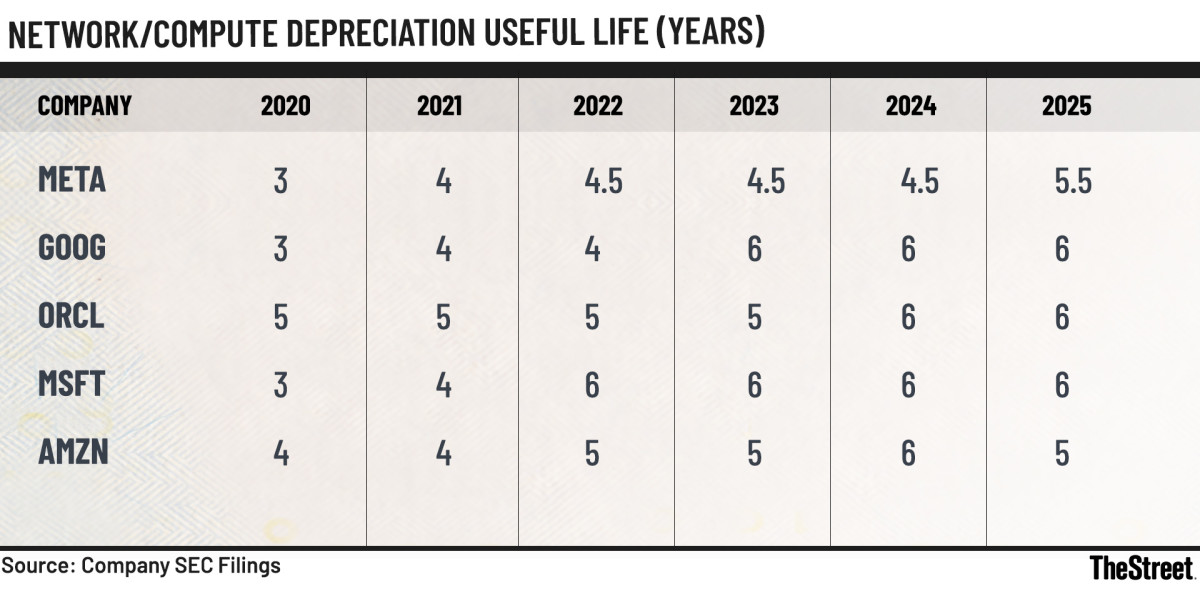

Network/Compute Depreciation Useful Life Table

Company Filings

Burry claims AI hyperscalers are artificially boosting earnings

Burry wrote on November 10, in a post on X (formerly Twitter):

“Understating depreciation by extending useful life of assets artificially boosts earnings —one of the more common frauds of the modern era. Massively ramping capex through purchase of Nvidia chips/servers on a 2-3 yr product cycle should not result in the extension of useful lives of compute equipment.”

The table above is based on data provided by Burry in his post, collected from SEC filings. It is evident that the useful life of hardware has increased by up to three years, depending on the company.

In business accounting, depreciation is a method used to allocate the cost of a purchased asset over the period during which the asset is expected to be in use. This means that if the useful life of equipment (think GPUs) is extended by three years, the company can spread the cost of those GPUs over three more years, which lowers the annual depreciation expense and directly raises reported earnings.
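To make the effect concrete, here is a minimal sketch of the arithmetic. It assumes straight-line depreciation with no salvage value, which the article does not specify, and the $6 billion purchase price and the three-year and six-year lives are hypothetical figures chosen for illustration, not numbers from any company's filings.

```python
def straight_line_annual_expense(cost, useful_life_years, salvage_value=0.0):
    """Annual depreciation expense under the straight-line method."""
    if useful_life_years <= 0:
        raise ValueError("useful_life_years must be positive")
    return (cost - salvage_value) / useful_life_years


# Hypothetical GPU purchase; not taken from any filing.
gpu_cost = 6_000_000_000

expense_3yr = straight_line_annual_expense(gpu_cost, 3)  # shorter useful life
expense_6yr = straight_line_annual_expense(gpu_cost, 6)  # extended useful life

# The gap is the yearly expense that disappears from the income statement
# (before taxes) while the hardware is still inside the shorter life.
annual_boost = expense_3yr - expense_6yr

print(f"Annual expense, 3-year life: ${expense_3yr:,.0f}")
print(f"Annual expense, 6-year life: ${expense_6yr:,.0f}")
print(f"Pre-tax earnings lift per year: ${annual_boost:,.0f}")
```

Under these assumptions, doubling the useful life halves the yearly charge. The total cost recognized over the asset's life is unchanged; only the timing moves, which is why the choice of useful life matters so much for near-term earnings.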

What Burry is alleging is that the useful-life figures in the filings have grown over the years because companies figured out that earnings would look better that way, not because GPUs can actually work for six years. That is a very serious allegation; let’s hope Burry can prove it.

Burry went on to estimate that by 2028, Oracle (ORCL) will overstate its earnings by 26.9% and Meta (META) by 20.8%, adding that he will release more details on November 25.

Could Burry be right about the AI accelerators’ life span?

In October 2024, Tech Fund posted on X that, according to an AI architect at Google, the lifetime of datacenter GPUs at current utilization levels can be three years at most.

It’s wise to be skeptical of these claims, as we have no way to verify the identity of the individual who claimed to be a Google employee. However, Tech Fund has a good reputation for reliable sources.

It will be interesting to see what Burry posts next, and whether he has his own sources or is basing everything on this one source.

We should also note that Meta released a study describing its Llama 3 model training. The cluster's model FLOPs utilization (MFU) rate was approximately 38%.

Tom’s Hardware extrapolated from the study that because the annualized failure rate for GPUs is about 9%, the cumulative failure rate over three years would be approximately 27%. Tom’s noted that GPUs likely fail more often after a year in service.
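The 27% figure corresponds to multiplying the 9% annual rate by three. As a rough check, the sketch below compares that linear estimate with a compounded one, where each year's failures are drawn only from the GPUs still running. Both versions assume the annual rate stays constant at 9%, which is a simplification; the function names are illustrative and not from any source.

```python
def linear_cumulative_failure(annual_rate, years):
    """Simple multiplication: annual rate times number of years."""
    return annual_rate * years


def compounded_cumulative_failure(annual_rate, years):
    """Share of GPUs that have failed if each year's failures come
    only from the units that survived the previous years."""
    return 1 - (1 - annual_rate) ** years


annual_rate = 0.09  # annualized GPU failure rate cited in the article
years = 3

linear = linear_cumulative_failure(annual_rate, years)
compounded = compounded_cumulative_failure(annual_rate, years)

print(f"Linear estimate:     {linear:.1%}")
print(f"Compounded estimate: {compounded:.1%}")
```

With a constant rate, the compounded estimate comes out slightly below the linear one, at roughly 24.6% versus 27%. If failures do accelerate after the first year, as Tom's suggested, the true figure could be higher than either estimate.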

While Meta’s study says the utilization rate of the cluster was 38%, we don’t know if this is a common practice.

For example, we can take CoreWeave and its recently revealed deal with Nvidia. As reported by Reuters, Nvidia will pay for all unused CoreWeave capacity (the unrented GPU capacity).

That strategy signals that CoreWeave aims for an extremely high utilization rate, which would shorten the cards’ lifespan even further.

Let’s hope Burry has a whistleblower and isn’t just guessing.
