Any conversation about AI eventually leads to Nvidia. The semiconductor giant is one of the biggest beneficiaries of the AI wave: it ended fiscal 2025 with $130.5 billion in revenue, more than double the previous year’s total. Its data center division alone brought in $115.2 billion.

By late November, however, the market is asking a new question: what does “dominance” mean when your largest customers are working hard to need you less?

On one side, Meta Platforms is in talks to spend billions on Google’s Tensor Processing Units, or TPUs. It may start by renting capacity next year and then install TPUs in its own data centers beginning in 2027.

Custom silicon and domestic AI processors are making Wall Street reconsider market “dominance” in this sector.


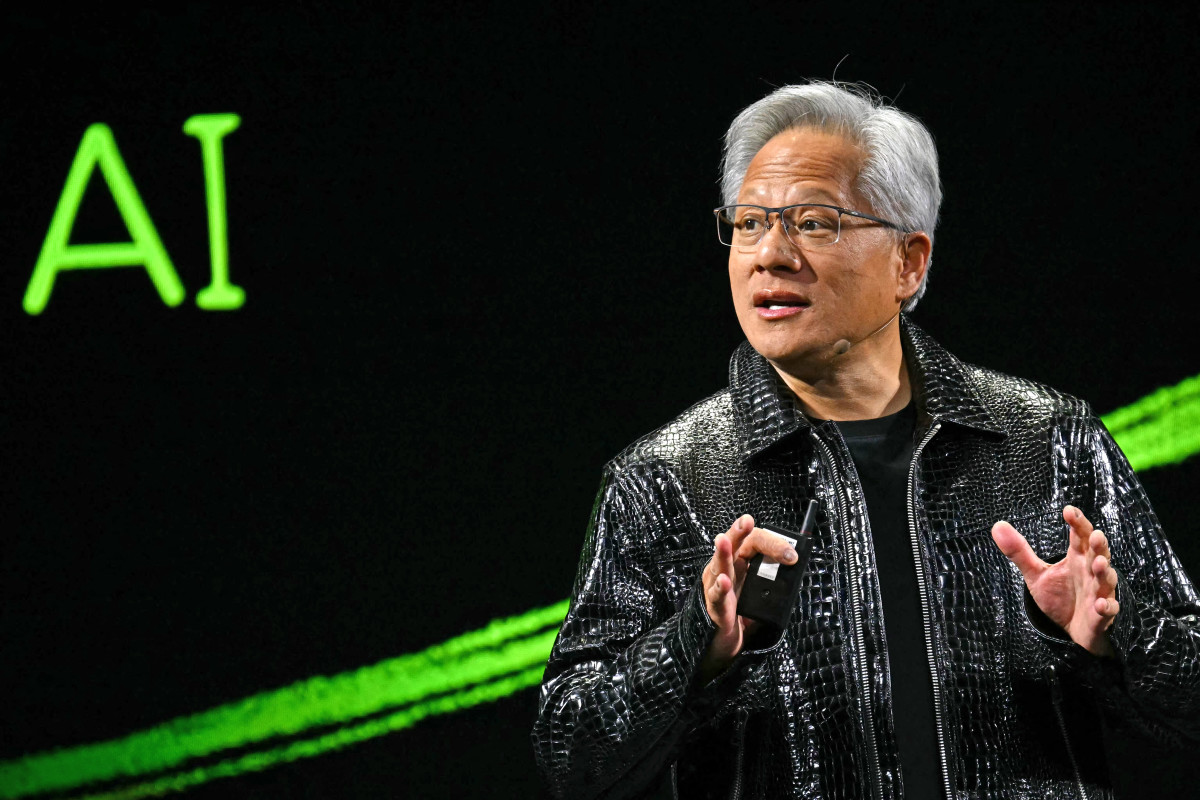

Photo by PATRICK T. FALLON on Getty Images

On the other, Baidu is launching a five-year plan for AI chips to fill the gap Nvidia is leaving in China, where U.S. export restrictions have hit hard.

Estimates of Nvidia’s share of the AI accelerator market range from 80% to 90%, and some put it as high as 95%.

But the latest news from Meta Platforms and Baidu shows how quickly that fortress is being tested, from both ends of the global AI supply chain.

Meta’s Google chip talks show how fragile Nvidia’s dominance is

The first development is simple and blunt: one of Nvidia’s biggest customers is looking for another supplier.

According to several reports, Meta is negotiating a deal that would allow it to:

- Rent TPUs from Google Cloud starting in 2026.

- Install Google TPUs in its own data centers beginning in 2027.

Meta has already raised its 2025 capital spending forecast to $70 billion to $72 billion, specifically to build AI data centers and servers. That is above its previous range and, at the midpoint, roughly $30 billion more than it spent in 2024.


Even a small shift of that money from Nvidia to Alphabet’s processors matters.

- Nvidia still dominates the AI accelerator market, but its customer base is concentrated: a single hyperscaler like Meta may account for a mid-teens percentage of its demand.

- Depending on the size and timing of the deployments, some estimates suggest a Meta–TPU deal could cost Nvidia up to 10% of its annual sales.

Markets have noticed. Nvidia’s market value has fallen by more than $700 billion since it briefly reached $5 trillion earlier this year. Alphabet’s stock has risen, pushing its market value closer to $4 trillion as Wall Street begins to put a real dollar value on TPU sales.


Morgan Stanley, for example, estimates that Alphabet could ship 500,000 to 1 million TPUs a year by 2027. Every additional 500,000 units would add roughly 11% to Google Cloud’s revenue and 3% to Alphabet’s earnings per share.
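To make the Morgan Stanley sensitivity concrete, the short Python sketch below scales it to a larger order. It assumes, as a simplification not stated in the note, that the effect is linear, so each extra block of 500,000 TPUs adds the same ~11% to Google Cloud revenue and ~3% to Alphabet’s earnings per share. The function name `tpu_uplift` is purely illustrative.

```python
# Morgan Stanley sensitivity quoted above: each additional 500,000 TPUs
# adds ~11% to Google Cloud revenue and ~3% to Alphabet's EPS.
# Assumption for illustration only: the effect scales linearly with volume.
UNITS_PER_BLOCK = 500_000
CLOUD_UPLIFT_PER_BLOCK = 0.11
EPS_UPLIFT_PER_BLOCK = 0.03


def tpu_uplift(additional_units: int) -> tuple[float, float]:
    """Return (Google Cloud revenue uplift, EPS uplift) as fractions."""
    blocks = additional_units / UNITS_PER_BLOCK
    return blocks * CLOUD_UPLIFT_PER_BLOCK, blocks * EPS_UPLIFT_PER_BLOCK


for units in (500_000, 1_000_000):
    cloud, eps = tpu_uplift(units)
    print(f"{units:,} extra TPUs: ~{cloud:.0%} Cloud revenue, ~{eps:.0%} EPS")
```

Under that linear assumption, 1 million additional units would mean roughly 22% more Google Cloud revenue and about 6% higher EPS. In practice, pricing and deployment timing may make the relationship less tidy.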

That doesn’t eliminate Nvidia from the game overnight. But it does shift the debate from “Is it possible for anyone to catch Nvidia?” to “How much of the AI hardware market will Nvidia share with its competitors?”

Baidu’s Kunlun chips turn Nvidia’s China problem into Baidu’s opening

If Meta’s talks with Google are about diversification, the demand for Baidu’s chips is a matter of necessity.

U.S. export restrictions already bar Nvidia from selling its most advanced GPUs to China. Beijing has reportedly gone further, telling companies not to buy Nvidia’s less powerful H20 processors, which it can still sell in the country.


Chinese officials recently blocked ByteDance from using Nvidia chips in new data centers and ordered state-funded projects to use only domestically made AI processors, leaving them no choice but a local alternative.

That’s where Baidu’s Kunlunxin unit comes in.

At its Baidu World conference in November, the company unveiled:

- The M100, an inference-focused AI chip, set to launch in early 2026.

- The M300, a chip for both training and inference, planned for early 2027.

- New Tianchi 256 and Tianchi 512 “supernode” systems that link hundreds of Baidu chips to mimic the performance of large Nvidia clusters.

Analysts are starting to put numbers on that plan:

- JPMorgan expects Baidu’s AI chip sales to grow roughly sixfold, to about 8 billion yuan (about $1.1 billion), by 2026.

- Macquarie values the Kunlunxin unit at around $28 billion on its own.

- Kunlunxin has already won orders from suppliers linked to China Mobile, one of the country’s largest telecom carriers, a sign that the business is gaining commercial traction.

Chinese tech companies such as Alibaba and Tencent are also warning investors that the constraint over the next two to three years will be a shortage of AI chips, not a shortage of demand. That leaves any reliable domestic chip supplier with an almost captive market.

The arithmetic isn’t good for Nvidia: China has been a big market for AI accelerators, but U.S. sanctions and Chinese industrial policy are pushing local companies to build around Nvidia.

AI accelerator pie is exploding, and Nvidia still owns the biggest slice

Even with those problems, the AI chip industry as a whole is growing so quickly that Nvidia could lose market share and still grow its sales.

A few key data points:

- According to one estimate, the data center GPU market will grow from $120 billion in 2025 to $228 billion in 2030, a compound annual growth rate of 13.7%.

- One research firm forecasts $145.1 billion in AI GPU chip sales between 2024 and 2029, with growth of more than 30% a year.

- Alphabet, Microsoft, Amazon, and Meta recently accounted for almost $200 billion of $290 billion in data center infrastructure spending. That figure is expected to rise more than 40% in 2025 as they race to expand AI capacity.
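As a quick sanity check on the first estimate, compounding $120 billion at 13.7% a year for five years should land near $228 billion. The minimal Python sketch below works backward from the two endpoints to the implied annual rate; the `cagr` helper is a generic formula written for illustration, not taken from the estimate’s source.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Data center GPU market estimate quoted above, in billions of U.S. dollars.
rate = cagr(120, 228, years=2030 - 2025)
print(f"Implied annual growth: {rate:.1%}")
```

The result rounds to 13.7%, so the quoted growth rate and the two market-size figures are consistent with each other.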

Within that surge, Nvidia remains the main beneficiary:

- It made $130.5 billion in sales in fiscal 2025, which is 114% more than the year before.

- S&P Global Ratings now expects Nvidia’s revenue to reach $205 billion in fiscal 2026 and $272 billion in fiscal 2027.

- Its core business runs at gross margins of 70% to 75%, exceptionally high for the semiconductor industry.
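The figures in this list also imply growth rates that are not stated outright. The Python sketch below, using only the numbers quoted here, backs out Nvidia’s prior-year revenue from the 114% growth figure and computes the year-over-year growth implied by the S&P Global Ratings forecasts. The helper name `growth_rates` is illustrative.

```python
def growth_rates(values: list[float]) -> list[float]:
    """Year-over-year growth between consecutive values, as fractions."""
    return [later / earlier - 1 for earlier, later in zip(values, values[1:])]


# Figures quoted in the list above, in billions of U.S. dollars.
FY2025_REVENUE = 130.5
FY2025_GROWTH = 1.14  # "114% more than the year before"

# Back out the prior year: revenue_2025 = revenue_2024 * (1 + growth).
fy2024_revenue = FY2025_REVENUE / (1 + FY2025_GROWTH)
print(f"Implied fiscal 2024 revenue: ~${fy2024_revenue:.1f}B")

# S&P Global Ratings forecasts for fiscal 2026 and fiscal 2027.
years = ["fiscal 2026", "fiscal 2027"]
rates = growth_rates([FY2025_REVENUE, 205.0, 272.0])
for year, rate in zip(years, rates):
    print(f"{year}: implied growth ~{rate:.0%}")
```

On these numbers, fiscal 2024 revenue works out to about $61 billion, and the S&P forecasts imply growth of roughly 57% in fiscal 2026 and 33% in fiscal 2027: still rapid, but slowing.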

In other words, the “arms race” is making the arms market bigger.


The problem for Nvidia isn’t that the pie is shrinking. It’s that more players are crowding in for a slice. TPUs are taking a bigger share at the top end of U.S. clouds, while Baidu (and Huawei) are capturing much of the growth in China.

What this two-front chip war means for investors

For now, Nvidia remains the best way to capitalize on the growth of AI infrastructure. But the Meta and Baidu stories show that the industry is getting more complicated for investors to track:

- Nvidia: Still the leading AI infrastructure play, but hyperscalers may shift workloads to in-house and rival processors.

- Alphabet: TPUs are becoming a profit center; any Meta deal would improve its cloud economics and strengthen Google’s position in AI software and hardware.

- Meta Platforms: Its massive AI capex is about control and growth, and TPUs would give it more secure access to compute and more pricing leverage.

- Baidu: Kunlunxin gives Baidu a unique opportunity to become China’s leading AI chip supplier, helped by policy tailwinds but facing execution and manufacturing challenges.

Big picture: there is no single “Nvidia killer.” Instead, regional and vertical competitors are carving out a significant portion of the fast-growing AI hardware industry. Investors focused on the numbers should watch how quickly Nvidia keeps growing and how much share Alphabet and Baidu quietly siphon off over the next several years.