Nvidia (NVDA) had another solid year, racking up wins in the AI race, but its latest move just added a major new chapter in its already illustrious growth story.

The AI behemoth quietly pushed out a massive new update to CUDA, one that’s being billed as the most significant shakeup since 2006.

CUDA, the software layer that gives Nvidia’s hardware its real-world punch, just got a lot easier to program, more efficient, and even tougher for its rivals to replace.

Though the update might not appear as flashy as a new Nvidia GPU launch, it pushes the company further ahead in the AI arms race, keeping developers locked into its robust ecosystem while making each new GPU more valuable than the last.

Nvidia rolls out its biggest CUDA update in 20 years, tightening its lead in the AI race.

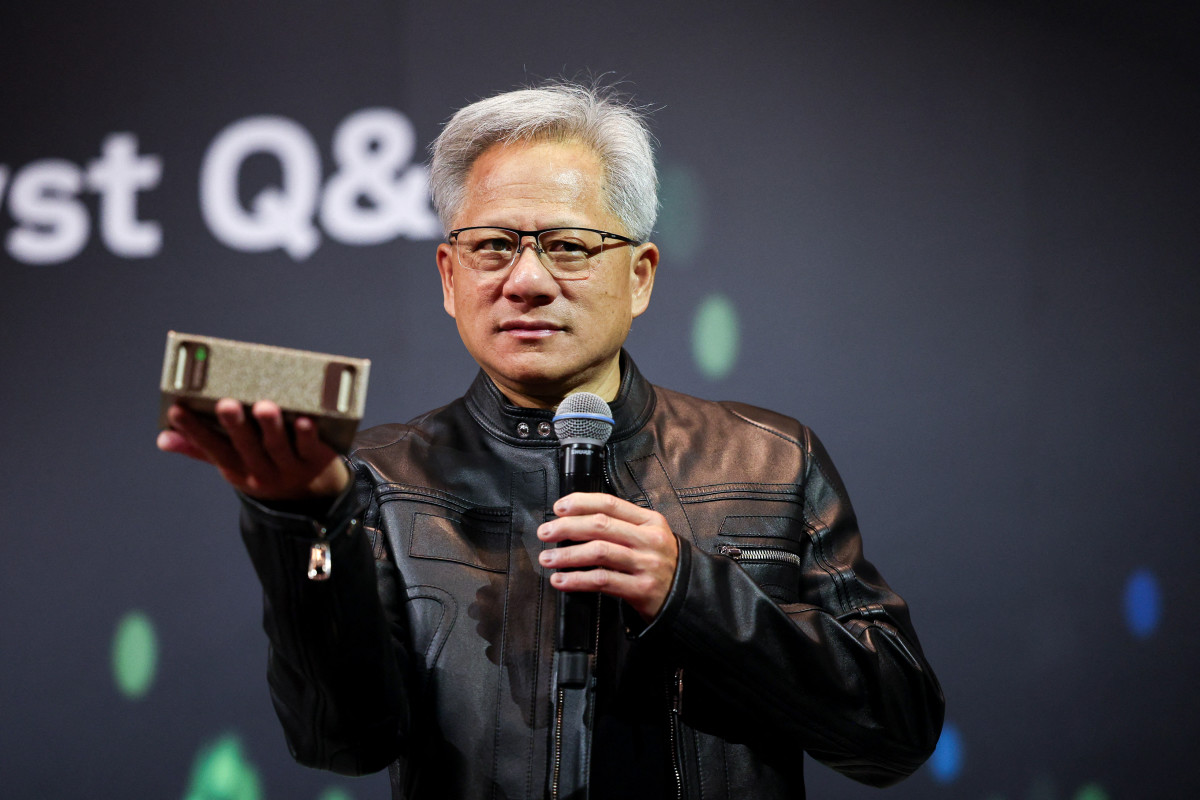

Photo by I-HWA CHENG on Getty Images

What is CUDA?

CUDA is Nvidia’s software layer, and it’s one that even dedicated tech enthusiasts tend to overlook.

It’s not hard to see why, though.

Most Nvidia headlines focus on how many GPUs the company is shipping, which makes it easy to forget that it does anything else.

At its core, CUDA allows programmers to efficiently tap into the tiny processing cores inside Nvidia’s sophisticated GPUs.

GPUs are phenomenal at doing a ton of small computing jobs all at once, and CUDA is the critical bridge that allows software to take advantage of that superpower.

To better explain what it does, imagine you’re running a bakery.

A CUDA-powered GPU is something like hiring 2,000 perfectly skilled bakers, each repeating one small step over and over. CUDA is the system that coordinates everything in the kitchen, ensuring the cake comes out right each time.

That’s the magic that lets CUDA turn Nvidia GPUs from gaming gear into engines for AI, video editing, weather models, and more.
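To make the "many small jobs at once" idea concrete, here is a minimal Python sketch. It is not CUDA code and does not use any Nvidia API; the names `scale_and_add_kernel` and `launch` are hypothetical stand-ins. Each "baker" is one call to the kernel with its own index, and the launcher simply loops where a real GPU would run those calls in parallel.

```python
def scale_and_add_kernel(i, a, x, y, out):
    """Work done by one 'baker' (one GPU thread): a single multiply-add."""
    out[i] = a * x[i] + y[i]


def launch(kernel, n_threads, *args):
    """Hypothetical stand-in for a GPU launch.

    A real GPU would run these calls side by side on its cores;
    this sketch just loops over the indices one at a time.
    """
    for i in range(n_threads):
        kernel(i, *args)


x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(x)

# One "thread" per element: every call touches only its own slot in out.
launch(scale_and_add_kernel, len(x), 2.0, x, y, out)
print(out)  # [12.0, 24.0, 36.0, 48.0]
```

Because no call depends on another call's result, the work can be spread across as many cores as the hardware has, which is the property the bakery analogy is pointing at.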

What CUDA adds to Nvidia’s stack

Hardware has made Nvidia richer; CUDA is what makes it sticky.

The platform is loaded with libraries, compilers, and tools that plug seamlessly into the programming languages developers already use.

So once a team builds out its AI or simulation stack around the platform, switching becomes costly and difficult.

That lock-in gives Nvidia software-like margins (a 5-year gross margin of 67%) and turns each new GPU release into an ecosystem-wide upgrade.

And when Nvidia controls somewhere between 70% and 95% of the AI-accelerator market (and some estimates run even higher), as Technology Magazine reports, it’s no surprise that CUDA becomes the default language of modern AI.

Nvidia’s biggest upgrade in 20 years just changed the game

Nvidia just unveiled CUDA 13.1, which it’s calling the biggest upgrade to the platform in 20 years.

For a piece of Nvidia’s arsenal that flies under the radar, the update could have major implications for how AI gets built.

Nvidia engineers Jonathan Bentz and Tony Scudiero commented on the upgrade in a blog post.

The key change is CUDA Tile, a brand-new programming model that changes how developers interact with Nvidia GPUs.

Instead of micro-managing thousands of individual threads, as they did previously, programmers can now work with “tiles” of data and let CUDA determine the ideal way to distribute that workload.
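The tile idea can be sketched in plain Python. This is an illustration of the concept only, not the CUDA Tile or cuTile API; `tile_origins`, `compute_tile`, and `matmul_by_tiles` are hypothetical names. The programmer describes what happens to one tile of the output, and the loop that plays the role of the runtime is free to process tiles in any order.

```python
def tile_origins(rows, cols, tile):
    """List the top-left corner of every output tile."""
    return [(i0, j0)
            for i0 in range(0, rows, tile)
            for j0 in range(0, cols, tile)]


def compute_tile(a, b, c, i0, j0, tile):
    """Fill one tile of c = a @ b. Tiles on the edge may be smaller."""
    inner = len(b)
    for i in range(i0, min(i0 + tile, len(c))):
        for j in range(j0, min(j0 + tile, len(c[0]))):
            c[i][j] = sum(a[i][k] * b[k][j] for k in range(inner))


def matmul_by_tiles(a, b, tile=2, order=None):
    """Multiply a @ b one output tile at a time."""
    rows, cols = len(a), len(b[0])
    c = [[0] * cols for _ in range(rows)]
    tiles = tile_origins(rows, cols, tile)
    # The stand-in "runtime" may pick any order (a GPU could run tiles
    # in parallel), because each tile writes a disjoint part of c.
    for i0, j0 in (order(tiles) if order else tiles):
        compute_tile(a, b, c, i0, j0, tile)
    return c


a = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
b = [[1, 0, 2], [0, 1, 0], [3, 0, 1]]

forward = matmul_by_tiles(a, b)
backward = matmul_by_tiles(a, b, order=lambda t: list(reversed(t)))
print(forward == backward)  # True: the result does not depend on tile order
```

The design point is that the code never says which core handles which element. It only defines the tile-level work, which leaves the scheduling decision to whatever sits underneath.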

Key additions include:

- A new virtual instruction set (CUDA Tile IR) gives tile-based code a common target to compile to, so it runs more smoothly on Nvidia GPUs.

- cuTile for Python lets everyday Python developers write fast, tile-based GPU code without venturing into complex C++ territory.

- “Green contexts” are a smarter, more efficient way to divide up a GPU’s resources, so a variety of jobs each get exactly what they need.

- Better Multi-Process Service (MPS) tools keep different workloads from stepping on each other.

- Up to 4x faster grouped matrix multiplies on Blackwell GPUs give AI models a marked increase in speed without any change to the hardware.
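For readers unfamiliar with the last item, here is a rough Python sketch of what a "grouped" matrix multiply means, assuming the usual sense of the term: several independent products, possibly with different shapes, handed over in a single call. The function names are hypothetical, and the sketch says nothing about how Nvidia achieves its speedup.

```python
def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def grouped_matmul(pairs):
    """One call, many independent products; shapes may differ per pair."""
    return [matmul(a, b) for a, b in pairs]


groups = [
    ([[1, 2]], [[3], [4]]),                # 1x2 times 2x1
    ([[1, 0], [0, 1]], [[5, 6], [7, 8]]),  # 2x2 times 2x2
]

results = grouped_matmul(groups)
print(results)  # [[[11]], [[5, 6], [7, 8]]]
```

Handing the whole group over at once is what gives the library room to schedule the small products together rather than one launch at a time.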

In short, Nvidia GPUs have gotten easier to program, more efficient, and even harder for developers to walk away from.

The update reminds me of a famous Jensen Huang quote, reported by TechRadar, that predicted the end of coding.
