Nvidia’s next step isn’t merely to ship more Blackwell GPUs. It’s to make the code that runs on those chips simpler to build, port, and maintain.

With CUDA 13.1’s Tile programming model, Nvidia is making it harder to switch to other hardware and easier to upgrade within its own lineup. Both help keep prices high and margins stable, even when export regulations and allocations change.

Nvidia’s year has been full of superlatives, including a record market valuation, lightning-fast growth, and an AI build-out measured in gigawatts. Investors aren’t worried about whether the firm is in the lead; they’re worried about whether that lead will last as policies change and competitors grow louder.

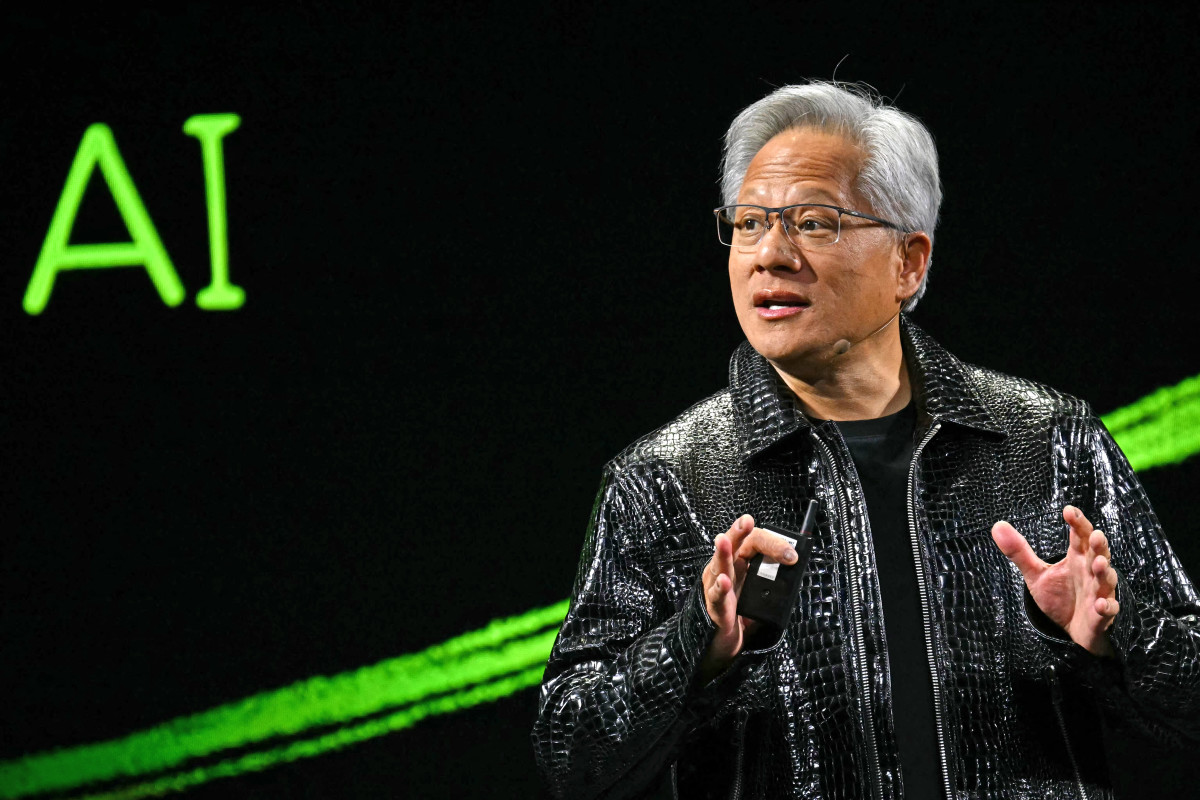

CEO Jensen Huang spelled it out.

The statement is used in political arguments, but it also matters for the stock: access will continue to be messy. Nvidia’s answer is to make staying on its platform the safest choice for developers and CFOs.

That’s precisely what CUDA 13.1 does, especially with its Tile programming approach.

A new programming model quietly extends Nvidia’s lead.

Photo by PATRICK T. FALLON on Getty Images

CUDA 13.1 moves Nvidia from making fast chips to making better software

CUDA 13.1 adds Tile, a higher-level programming approach for Nvidia GPUs. Instead of hand-mapping hundreds of threads and re-tuning kernels every time a new architecture comes out, developers work in terms of tiles, which are larger chunks of data and the arithmetic applied to them.

The Nvidia compiler and runtime take care of the low-level complexities, such as scheduling, thread dispatch, and tensor core mapping. Weeks of tweaking are turned into tools.
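To make the contrast concrete, here is a minimal conceptual sketch in plain Python. It is not the CUDA Tile API and does not run on a GPU; the function names and the `tile` parameter are assumptions made up for illustration. The first function mirrors the thread-style view, where each output element is indexed by hand. The second treats a block of the output as the unit of work and leaves the inner mapping to whatever executes the tile.

```python
# Conceptual illustration only: plain Python, NOT the CUDA Tile API.
# Function names and the `tile` parameter are hypothetical.


def matmul_per_thread(a, b):
    """Thread-style view: one 'thread' per (row, col) output element."""
    n, k, m = len(a), len(b), len(b[0])
    out = [[0.0] * m for _ in range(n)]
    for row in range(n):        # stand-in for a thread's y index
        for col in range(m):    # stand-in for a thread's x index
            out[row][col] = sum(a[row][i] * b[i][col] for i in range(k))
    return out


def matmul_tiled(a, b, tile=2):
    """Tile-style view: the unit of work is a tile x tile block of the output."""
    n, k, m = len(a), len(b), len(b[0])
    out = [[0.0] * m for _ in range(n)]
    for r0 in range(0, n, tile):            # which output tile (rows)
        for c0 in range(0, m, tile):        # which output tile (cols)
            for k0 in range(0, k, tile):    # walk matching input tiles
                # Everything below is the "low-level mapping" that a
                # tile-aware toolchain would be free to schedule itself.
                for row in range(r0, min(r0 + tile, n)):
                    for col in range(c0, min(c0 + tile, m)):
                        acc = 0.0
                        for i in range(k0, min(k0 + tile, k)):
                            acc += a[row][i] * b[i][col]
                        out[row][col] += acc
    return out


if __name__ == "__main__":
    a = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    b = [[9, 8], [7, 6], [5, 4]]
    # The result does not depend on the tile size chosen.
    assert matmul_per_thread(a, b) == matmul_tiled(a, b, tile=2)
    assert matmul_tiled(a, b, tile=2) == matmul_tiled(a, b, tile=3)
```

In this sketch the tile size changes how the work is carved up but not the answer, which is the kind of knob a toolchain could, in principle, pick per architecture without the developer touching the algorithm.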

In practice, it means writing once and upgrading more quickly. Code that works well now can transition to Blackwell and beyond with a lot less “kernel surgery.”

Fewer problems also arise between generations. When the toolchain hides the idiosyncrasies of the hardware, performance cliffs are less likely to occur.

Most organizations won’t move, since it’s simpler to upgrade inside the Nvidia ecosystem than to try out a competitor’s stack. That’s not simply a speed moat; it’s a workflow moat.

Blackwell GPUs with the Tile programming model speed hardware upgrades

The market already prices in that Nvidia makes the best hardware for AI. What matters right now is the programming paradigm and the developer experience.

Businesses want a short jump from buying silicon to putting it into production once it arrives. Tile makes that hop shorter. Fewer manual rewrites result in faster GPU deployment, smoother validation, and fewer missed milestones.

Tile also fits how big companies really function. Large teams would rather have predictable software optimization and performance tuning than heroic fixes. By raising the level of abstraction, CUDA 13.1 turns a Blackwell upgrade from a rebuild into an acceleration.

The programming model that lifts developer experience and programming efficiency

Benchmarks make the news. Developer experience is what gets budgets.

When teams code at the tile level, they can concentrate on algorithms and data flow instead of thread details. Tooling is also important. When profilers, debuggers, and libraries work well with Tile, the code becomes easier to understand.

That cuts down on the expenses of onboarding and the danger of regression. Projects continue to progress, even after employees leave or contractors complete their work.

When internal operators and automation programs use Tile semantics, it helps retain customers. Leaving Nvidia means more than simply switching chips.

Software optimization that shows up in margins: Being able to move software around offers pricing leverage.

Portability that supports pricing power and margins

Rewriting costs go down as the toolchain handles more of the hard work. Fewer rebuilds imply quicker deployment and earlier use.

Faster deployment helps keep prices stable. Customers pay for predictability and time-to-value when models ship sooner, even as supply improves.

A large order book that lasts for many quarters is also worth more when customers can move allocations or upgrade to a new generation without having to rewrite the code. Less friction between “boxes arrive” and “workloads in production” helps keep gross margin steady as volumes grow.

If you’re predicting Nvidia stock prices beyond 2025, that software-aided margin durability should have its own line.

The workflow moat keeps the AI buildout on track

Exports will stay loud. Washington can make it harder for China to obtain advanced GPUs while facilitating access for its allies.

Beijing can use “buy domestic” rules to its advantage. The Gulf and India can receive a lot of allocation by writing big checks. Every three months, a tug-of-war decides who gets chips.

That tug-of-war alters the distribution of chips in any given quarter. CUDA Tile does not make substations, HBM stacks, or wafers. What it does is make it easier to move workloads from one Nvidia part to another when supply or licensing forces a change of route.

If one corridor closes and another opens, customers can quickly switch to the next best Nvidia part. This acts as a shock absorber in the profit and loss statement. Geopolitics decides where hardware goes, and CUDA helps decide how quickly it turns into billable compute and recognized revenue.

Faster GPU deployment within the Nvidia ecosystem

You can see Tile’s portability dividend in everyday use. Tile hides small differences between hardware generations, which makes validation cycles shorter.

After delivery, utilization ramps up faster. Clouds and businesses fill capacity sooner, which helps them reach their revenue goals on time.

There are fewer fights over regressions. Teams spend less time hunting thread-level bugs and more time improving models and data pipelines, which is where the real value comes from.

What corporate software leaders want isn’t just speed; it’s also dependability. That’s why the Nvidia ecosystem is a safe standard to build on.

In today’s competitive market, ease of adoption is a big plus

AMD and other companies are closing the gap on memory bandwidth and throughput. The next hill isn’t just more TOPS; it’s also how easy it is to put a lot of them to use.

A competitor needs strong hardware and a programming model that is simple for developers to use and will work with future versions of the software. They also need strong tools, a lot of libraries, and a lively AI community.

Matching peak FLOPS is necessary. The hard part is matching the developer experience. Until a rival does, Nvidia owns the “least painful upgrade” lane, which is where most businesses spend their money.

Tile helps money come in faster, from making chips to managing the supply chain.

There are still real limits on the amount of space, packaging, and HBM that is available. Tile can’t add units, but it can help turn units into revenue faster.

Smoother upgrades mean faster ramps when the hardware arrives. With less code to rewrite, there is less schedule slippage, and backlog converts to revenue more reliably.

That level of predictability is valuable in a supply chain that will stay tight and geographically hard to manage.

Keep an eye on Nvidia stock and investor news

Instead of press releases, we should pay more attention to release notes. Frameworks, libraries, and OEM partners that show Tile-first pathways in changelogs show that adoption is happening. Another sign is that profilers and debuggers assume tiles by default.

- If GPU-hour prices hold up better than expected as supply improves, pricing power is about ecosystem value as well as scarcity.

- Think about unit delivery as well as “time to value” and “upgrade velocity.” These improvements should help speed up the process of moving from current-generation to Blackwell-class parts.

- There are worries about the concentration of hyperscalers. If more sovereign and enterprise transactions use Tile-centric integration, the moat grows beyond the Big Four.

The most important thing about AI and using technology

Wall Street often refers to Nvidia as the leading hardware company for AI development, and for good reason. The Tile programming method in CUDA 13.1 is what keeps it on top when the crown gets too heavy.

Amid policy changes and competitive noise, Nvidia is raising switching costs by letting developers focus on code that carries across generations of Nvidia hardware, rather than on tuning each individual thread.

There are also dangers. Export limits could break up markets, packaging and HBM could slow down shipments, and new competitors will keep coming.

But investors might support a software-plus-silicon workflow moat that keeps margins high, speeds up deployments, and makes the huge order book more reliable.

If you own NVDA, you’re not just betting on the fastest chip. You’re also betting that it will be the easiest to adopt and deploy. If you’re on the sidelines, keep an eye on the adoption trail.

If Tile continues to show up in OEM roadmaps and frameworks through 2026, Nvidia’s margin story has a second engine, and the competition still has to build one.
