Nvidia (NVDA) CEO Jensen Huang used CES 2026 to draw a line between where AI has been and where he believes it's heading next.

In a CNBC sitdown from the show floor, Huang framed “physical AI” as the next major phase of intelligence.

It marks a clear break from machines that merely generate answers, toward machines that can see, reason, and deliver real-world utility through robotics, autonomous systems, and edge computing.

Since the release of OpenAI's ubiquitous ChatGPT, the AI race has centered on larger models, faster training runs, and stronger benchmarks for text and images. Huang, though, believes those efforts solve only part of the problem.

Moving AI into the real world brings tougher requirements: reliability, context, and the ability to learn without putting expensive and potentially dangerous hardware at risk.

At CES, Nvidia didn’t just talk up a single killer model.

Instead, it laid out an end-to-end stack covering simulation, reasoning, orchestration, and edge compute.

Nevertheless, Nvidia stock is still treading water: as of Jan. 6, it was down 1% to $187.24 since my last coverage.

Recently, AI and big-name tech stocks have fallen out of favor, but that could soon change amid the shift toward physical AI.

For perspective, according to Fortune Business Insights, robotics is shaping up to be a massive addressable market, with service robotics expected to reach $90.09 billion by 2032.

Additionally, the market for humanoid robots is forecast to reach $6.5 billion by 2030. So even a mid-single-digit slice of these markets could add billions in incremental sales for Nvidia.

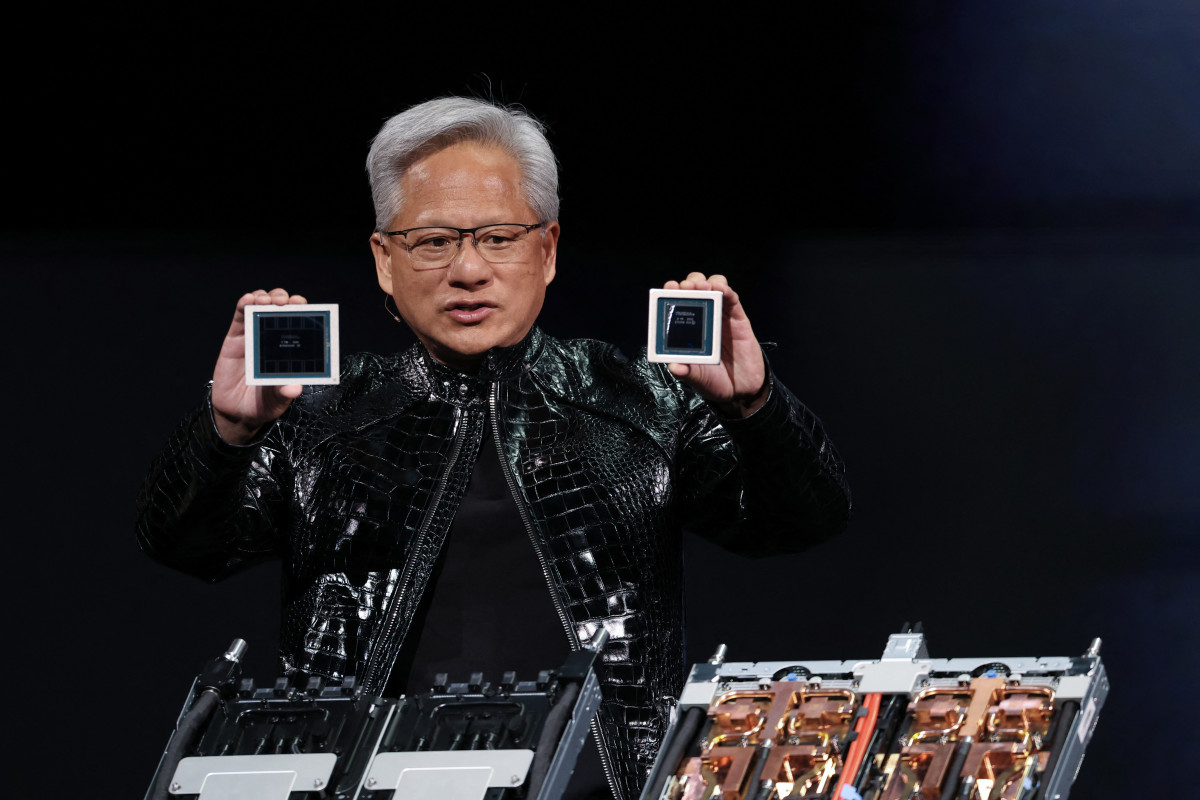

Jensen Huang outlines physical AI as the next phase, moving intelligence from screens into machines

Photo by PATRICK T. FALLON on Getty Images

Nvidia is treating robotics like infrastructure, not a side project

Huang framed physical AI as a practical, end-to-end pipeline designed to train machines efficiently before they are deployed in the real world.

In explaining Nvidia’s powerful approach to physical AI, Jensen Huang said:

In essence, Nvidia is betting that whoever controls the workflow ultimately controls the outcome. Here’s how that vision shaped up at the CES event:

- Simulated worlds before tangible risks: Cosmos Transfer 2.5 and Predict 2.5 can generate physically accurate environments, which then feed into Cosmos Reason 2, enabling robots to see, reason, and act before they ever touch real hardware.

- Humanoids get a real upgrade: Isaac GR00T N1.6 combines vision, language, and action for more comprehensive whole-body control, while Isaac Lab-Arena standardizes large-scale testing.

- Fixing the workflow mess: OSMO orchestrates data, training, simulation, and evaluation workflows across edge and cloud (linked with Microsoft's Azure Robotics Accelerator and open-source tools such as Hugging Face).

- Edge hardware that can ship: The Blackwell-based Jetson T4000 delivers four times the performance, 1,200 FP4 TFLOPS, and 64GB of memory within a 70W power envelope, with real-world traction from partners like Boston Dynamics, Caterpillar, and LG.

Huang says robotics’ moment has finally arrived

In the interview, Huang said that Nvidia’s big splash into robotics at CES is something the tech giant has been waiting years to execute.

He touted the long-term potential of robotics, particularly humanoids, an idea that had previously felt mostly out of reach.

In his opinion, the difference now is that the enabling technology has finally caught up.

Generative AI can now understand and simulate physical actions. As Huang put it, "the computer doesn't know or care what kind of tokens it's generating," whether those are words, images, or even finger movements.

That same idea underpins Nvidia’s next-generation Vera Rubin platform, which Huang framed as a significant step change in how AI systems are developed and monetized.

In breaking it down, he described modern AI systems as "AI factories," where value comes down to training speed, token costs, and throughput.

On that front, Huang said Rubin delivers a 4-times leap over Blackwell in training performance and cuts token costs by a factor of 10, driven by energy efficiency, algorithmic improvements, and a deeply integrated hardware-software system.

Nvidia thinks reasoning is the missing piece in self-driving

Though robotics hogged the spotlight, Nvidia's autonomous-driving announcement was equally consequential.

Nvidia showcased Alpamayo, a brand-new set of open "reasoning" models, simulation tools, and datasets aimed at the rare, hard-to-handle real-world scenarios that continue to hold back self-driving technology.

Nvidia says Alpamayo uses a reasoning-based vision-language-action model designed to think through unfamiliar driving situations step by step.

The launch puts Nvidia squarely in a crowded, hotly contested field that is still far from solved:

- Tesla is developing Full Self-Driving (Supervised) as an end-to-end autonomous stack.

- Waymo is investing in foundation "world models" alongside large-scale simulation.

- Mobileye specializes in consumer autonomous vehicles through SuperVision and Chauffeur, supported by safety-first frameworks.

- Aurora targets Level 4 autonomous freight, using AI to identify rare edge cases much more quickly.

Nvidia's DRIVE Hyperion platform is also gaining traction, with the Mercedes-Benz CLA set to become the first passenger car on the road fitted with Alpamayo's system.

Additionally, Alpamayo is backed by more than 1,700 hours of diverse driving data for training and testing autonomous systems.